# Kubernetes Deployment Guide

author: 郁燕飞, 韩皖

createTime:2022-05-20

updateTime:2022-08-17

# System Preparation

1.1 System environment

| Server | OS | Docker | IP | Hostname | Spec |
|---|---|---|---|---|---|
| server1 | CentOS 7.9 | 19.03.9 | 192.168.2.25 | master | 2 CPU cores, 8 GB RAM |
| server2 | CentOS 7.9 | 19.03.9 | 192.168.2.26 | node1 | 2 CPU cores, 8 GB RAM |
| server3 | CentOS 7.9 | 19.03.9 | 192.168.2.27 | node2 | 2 CPU cores, 8 GB RAM |

Note: each machine needs at least 2 CPU cores and 2 GB of RAM.

1.2 SELinux and the firewall must be disabled on every host. Stop and disable firewalld:

# systemctl stop firewalld.service

# systemctl disable firewalld.service

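1.3 Disable SELinux

Temporarily (switches to permissive mode until the next reboot):

#setenforce 0

Permanently (takes effect after a reboot):

#vim /etc/selinux/config # or edit /etc/sysconfig/selinux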

SELINUX=disabled
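To make the same change without opening vim (for example when preparing several hosts), here is a minimal sketch using sed. It assumes the standard `SELINUX=<mode>` line of CentOS 7, and the function name `set_selinux_disabled` is only illustrative. It edits /etc/selinux/config directly, because on CentOS 7 /etc/sysconfig/selinux is normally a symlink to that file and `sed -i` would replace a symlink with a regular file.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
set_selinux_disabled() {
  local conf="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left alone because
  # the pattern requires "=" directly after "SELINUX".
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root): set_selinux_disabled
```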

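1.4 Disable swap (by default kubelet refuses to start while swap is enabled)

Temporarily (until the next reboot):

# swapoff -a

Permanently (takes effect after a reboot):

#vim /etc/fstab # comment out the following line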

/dev/mapper/cl-swap swap swap defaults 0 0
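The fstab edit can also be scripted. This sketch assumes swap entries have `swap` as their filesystem type (third field), like the line above. `comment_out_swap` is a hypothetical name, and the function keeps a `.bak` copy before rewriting the file.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak"   # backup of the original file
  # Prefix "#" to uncommented lines whose 3rd field (fs type) is "swap";
  # every other line is printed unchanged.
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Usage (as root): comment_out_swap && swapoff -a
```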

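1.5 Create /etc/sysctl.d/k8s.conf so that bridged traffic is processed by iptables

The net.bridge.* keys exist only while the br_netfilter kernel module is loaded, so load it first:

#modprobe br_netfilter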

#cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

#sysctl --system
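`modprobe br_netfilter` only lasts until the next reboot, and the two `net.bridge.*` keys disappear while the module is not loaded. The following minimal sketch assumes systemd's modules-load.d mechanism, which CentOS 7 provides. It makes the module load at boot and then checks both values. The function names are hypothetical.

```shell
# Hypothetical helper: have systemd-modules-load load br_netfilter at boot.
persist_br_netfilter() {
  local dir="${1:-/etc/modules-load.d}"
  printf 'br_netfilter\n' > "$dir/k8s.conf"
}

# Hypothetical helper: verify that both bridge sysctls are set to 1.
check_bridge_sysctls() {
  local key
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    if [ "$(sysctl -n "$key" 2>/dev/null)" != "1" ]; then
      echo "$key is not 1"
      return 1
    fi
  done
  echo "bridge sysctls OK"
}

# Usage (as root):
#   modprobe br_netfilter && persist_br_netfilter && sysctl --system && check_bridge_sysctls
```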

1.6 Synchronize the clocks of all cluster hosts

#/usr/sbin/ntpdate us.pool.ntp.org

1.7 Add the cluster hostnames to /etc/hosts (every host)

#vim /etc/hosts

192.168.2.25 master

192.168.2.26 node1

192.168.2.27 node2
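kubeadm registers each node under the machine's hostname, so the hostnames should match the table (for example, run `hostnamectl set-hostname master` on 192.168.2.25). To make the /etc/hosts step safe to repeat, the following sketch appends an entry only when the name is not mapped yet. `add_host` is a hypothetical helper. An existing mapping is left untouched even if its IP differs.

```shell
# Hypothetical helper: append "IP NAME" unless NAME already appears in the file.
add_host() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 if a non-comment line lists NAME in any field after the IP.
  if awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit (found ? 0 : 1) }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every host):
#   add_host /etc/hosts 192.168.2.25 master
#   add_host /etc/hosts 192.168.2.26 node1
#   add_host /etc/hosts 192.168.2.27 node2
```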

# Install Docker (all nodes)

Every node needs Docker installed, enabled to start at boot, and configured with the daemon settings from 2.4.

2.1 Install the EPEL repository (together with vim and wget)

# yum install -y vim wget epel-release

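2.2 Install from the Docker repository

Before installing Docker Engine-Community on a new host for the first time, you need to set up the Docker repository. Afterwards, you can install and update Docker from that repository.

Set up the repository

Install the required packages: yum-utils provides yum-config-manager, and the device mapper storage driver requires device-mapper-persistent-data and lvm2.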

#yum install -y yum-utils device-mapper-persistent-data lvm2

#yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

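2.3 Install Docker Engine-Community

Here we install docker-ce-19.03.9-3.el7 and docker-ce-cli-19.03.9-3.el7.

To install a specific version of Docker Engine-Community, list the versions available in the repository, then choose one and install it:

1. List and sort the versions available in your repository. This example sorts the results by version number, highest first.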

# yum list docker-ce --showduplicates | sort -r

…………

docker-ce.x86_64 3:20.10.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.8-3.el7 docker-ce-stable

…………

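2. Install a specific version by its fully qualified package name. This is the package name (docker-ce), a hyphen (-), and then the version string from the second column with the epoch prefix (everything up to and including the first colon) removed. For example, 3:19.03.9-3.el7 becomes docker-ce-19.03.9-3.el7.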
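You can check the naming rule with a small helper (hypothetical, not part of yum). It turns one line of `yum list --showduplicates` output into the package spec by dropping the architecture suffix from column 1 and the epoch prefix from column 2.

```shell
# Hypothetical helper: "docker-ce.x86_64 3:19.03.9-3.el7 docker-ce-stable"
#                   -> "docker-ce-19.03.9-3.el7"
pkg_spec() {
  printf '%s\n' "$1" | awk '{
    name = $1; sub(/\.[^.]*$/, "", name)   # remove the ".x86_64" arch suffix
    ver  = $2; sub(/^[0-9]+:/, "", ver)    # remove the "3:" epoch
    print name "-" ver
  }'
}

# Usage: pkg_spec "$(yum list docker-ce --showduplicates | grep '19.03.9-3.el7')"
```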

#yum install -y docker-ce-19.03.9-3.el7 docker-ce-cli-19.03.9-3.el7 containerd.io

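3. Change Docker's default storage path (/var/lib/docker) to /data/docker

Create the directory, then edit docker.service and extend its ExecStart line as shown below: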

#mkdir -p /data/docker

#vim /usr/lib/systemd/system/docker.service

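...

ExecStart=/usr/bin/dockerd -H fd:// --data-root /data/docker --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd

...

--data-root replaces the deprecated --graph flag (both set Docker's storage directory). --exec-opt native.cgroupdriver=systemd switches Docker to the systemd cgroup driver, which kubeadm recommends.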
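Edits to /usr/lib/systemd/system/docker.service are overwritten when the docker-ce package is upgraded. As an alternative, the following sketch puts the ExecStart options in a systemd drop-in under /etc/systemd/system/docker.service.d/ (`override.conf` is an arbitrary file name). The empty `ExecStart=` clears the packaged command before the new one is set. The sketch uses `--data-root`, the current name of the deprecated `--graph` flag.

```shell
# Hypothetical helper: write a docker.service drop-in with the custom ExecStart.
write_docker_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  # The empty "ExecStart=" line resets the command from the packaged unit.
  cat > "$dir/override.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --data-root /data/docker --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd
EOF
}

# Usage (as root): write_docker_dropin && systemctl daemon-reload && systemctl restart docker
```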

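4. Reload systemd so it picks up the edited unit file, then start Docker and enable it at boot.

#systemctl daemon-reload

#systemctl start docker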

#systemctl enable docker
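To confirm that dockerd actually picked up the new storage path and cgroup driver, the following sketch reads the `DockerRootDir` and `CgroupDriver` fields of `docker info`. The expected values are the ones chosen in this guide, and the function name is hypothetical.

```shell
# Hypothetical helper: compare docker info against the settings used above.
check_docker_settings() {
  local root driver
  root=$(docker info --format '{{.DockerRootDir}}')
  driver=$(docker info --format '{{.CgroupDriver}}')
  if [ "$root" != "/data/docker" ]; then
    echo "unexpected data root: $root"; return 1
  fi
  if [ "$driver" != "systemd" ]; then
    echo "unexpected cgroup driver: $driver"; return 1
  fi
  echo "docker OK: $root, $driver"
}
```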

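2.4 Configure the Docker daemon (daemon.json)

The daemon.json below does two things. It marks the two private Harbor registries as insecure registries, so Docker accepts plain HTTP or untrusted certificates for them. It also limits each container's json-file log to 3 files of 50 MB. It does not set up a Docker Hub mirror; that would be the "registry-mirrors" key.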

#mkdir -p /etc/docker

#tee /etc/docker/daemon.json <<-'EOF'

{

"insecure-registries":["registry.lowaniot.com","registryvlan.lowaniot.com"],

"log-driver": "json-file",

"log-opts":{"max-size" :"50m","max-file":"3"}

}

EOF

#systemctl daemon-reload

#systemctl restart docker

#docker login registry.lowaniot.com -u admin -p HarborLowan250

#docker login registryvlan.lowaniot.com -u admin -p HarborLowan250
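`docker login -p` puts the password on the command line, where it is visible in the shell history and the process list; Docker itself prints a warning recommending `--password-stdin`. The following sketch logs in to both registries by passing the password on standard input. `HARBOR_USER` and `HARBOR_PASSWORD` are hypothetical variable names that must be exported beforehand.

```shell
# Hypothetical helper: log in to both Harbor registries via --password-stdin.
login_registries() {
  local reg
  for reg in registry.lowaniot.com registryvlan.lowaniot.com; do
    # The password travels through a pipe, not through argv.
    printf '%s' "$HARBOR_PASSWORD" |
      docker login "$reg" -u "$HARBOR_USER" --password-stdin || return 1
  done
}
```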

Install bash completion (used for docker command completion):

#yum install -y bash-completion

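# Install kubelet, kubeadm and kubectl (all nodes)

For the official installation guide, see https://kubernetes.io/docs/setup/independent/install-kubeadm/

Install kubeadm, kubelet and kubectl on every node. These steps are the same on the master and the worker nodes.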

3.1 Configure the yum repository (Aliyun mirror)

#cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

3.2 Install the packages (version 1.18.1 on CentOS 7)

#yum install -y kubelet-1.18.1-0 kubeadm-1.18.1-0 kubectl-1.18.1-0

#systemctl enable kubelet

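Do not start kubelet yet. Its configuration does not exist until kubeadm init (or kubeadm join) generates it, so for now only enable it at boot.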
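kubeadm, kubelet and kubectl should all match the 1.18.1 version used for `kubeadm init`. A quick check can catch a node where yum installed a different version. This sketch assumes the output formats of the 1.18 tools (shown in the comments) and uses a hypothetical function name.

```shell
# Hypothetical helper: all three tools must report the expected version.
# Output formats assumed (1.18):
#   kubeadm version -o short          -> v1.18.1
#   kubelet --version                 -> Kubernetes v1.18.1
#   kubectl version --client --short  -> Client Version: v1.18.1
check_k8s_versions() {
  local want="${1:-v1.18.1}" v
  for v in "$(kubeadm version -o short)" \
           "$(kubelet --version | awk '{print $2}')" \
           "$(kubectl version --client --short | awk '{print $3}')"; do
    if [ "$v" != "$want" ]; then
      echo "version mismatch: $v (expected $want)"
      return 1
    fi
  done
  echo "all at $want"
}
```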

# Initialize the control plane (master node)

Run the initialization command:

#kubeadm init --kubernetes-version=1.18.1 \

--apiserver-advertise-address=192.168.2.25 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

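Change --apiserver-advertise-address to your master node's IP.

Parameters:

--kubernetes-version: the Kubernetes version to deploy;

--apiserver-advertise-address: the IP address kube-apiserver advertises and listens on, i.e. the master's own IP;

--pod-network-cidr: the Pod network range, 10.244.0.0/16 here;

--service-cidr: the Service (SVC) network range, 10.1.0.0/16 here;

--image-repository: the registry to pull the control-plane images from, here the Aliyun mirror.

This parameter is essential. By default, kubeadm pulls its images from k8s.gcr.io, which is not reachable from mainland China, so --image-repository points it at the Aliyun mirror instead.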
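Pulling the control-plane images before `kubeadm init` surfaces mirror or network problems early. The following sketch uses `kubeadm config images list` and `kubeadm config images pull` with the same version and repository as the init command above. The variable and function names are just for this example.

```shell
KUBE_VERSION=1.18.1
IMAGE_REPO=registry.aliyuncs.com/google_containers

# Hypothetical helper: show, then pull, the images kubeadm init will need.
prepull_images() {
  kubeadm config images list --kubernetes-version="$KUBE_VERSION" --image-repository "$IMAGE_REPO" &&
    kubeadm config images pull --kubernetes-version="$KUBE_VERSION" --image-repository "$IMAGE_REPO"
}

# Usage (as root, on the master): prepull_images
```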

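When the cluster initializes successfully, kubeadm prints the output below. Save its last part (the kubeadm join command); the other nodes run it to join the cluster.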

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.25:6443 --token axxu39.kmkycmnaf1kf1xs1 \

--discovery-token-ca-cert-hash sha256:18419b2326108aef1498a6e0959117975d8b59ebd7e3d409205257469fe9bbb9

Keep the kubeadm join command, because the worker nodes need it later. By default, the token in it expires after 24 hours.
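If the saved join command is lost or its token has expired, run `kubeadm token create --print-join-command` on the master to print a new, complete join command. You can also recompute the `--discovery-token-ca-cert-hash` value from the cluster CA certificate. The following sketch wraps the openssl pipeline given in the kubeadm documentation in a hypothetical helper.

```shell
# Hypothetical helper: SHA-256 of the CA certificate's public key (DER form),
# i.e. the hex part of --discovery-token-ca-cert-hash sha256:<hash>.
ca_cert_hash() {
  openssl x509 -pubkey -in "${1:-/etc/kubernetes/pki/ca.crt}" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex | sed 's/^.* //'
}

# Usage (on the master): echo "sha256:$(ca_cert_hash)"
```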

# Configure Kubernetes (master node)

5.1 Configure kubectl

#mkdir -p $HOME/.kube

#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

#chown $(id -u):$(id -g) $HOME/.kube/config

For convenience, enable shell autocompletion for kubectl:

# echo "source <(kubectl completion bash)" >> ~/.bashrc

kubectl is now ready to use:

# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

5.2 Change the port range for NodePort Services

Find the --service-cluster-ip-range line and add the following line directly below it:

- --service-node-port-range=1-65535

# vim /etc/kubernetes/manifests/kube-apiserver.yaml

……

- --service-account-key-file=/etc/kubernetes/pki/sa.pub

- --service-cluster-ip-range=10.1.0.0/16

- --service-node-port-range=1-65535

- --tls-cert-file=/etc/kubernetes/pki/apiserver.crt

……
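You can make the same edit without vim. The following sketch assumes the manifest lists apiserver flags as `- --flag=value` items, like the excerpt above. It inserts the flag after `--service-cluster-ip-range` with the same indentation and does nothing if the flag is already present. The temporary file is created outside /etc/kubernetes/manifests, because kubelet treats files in that directory as static Pod manifests. Once the manifest changes, kubelet recreates the kube-apiserver Pod on its own. The function name is hypothetical.

```shell
# Hypothetical helper: add --service-node-port-range to the apiserver manifest.
add_nodeport_range() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  local range="${2:-1-65535}" tmp
  grep -q -- '--service-node-port-range=' "$manifest" && return 0
  tmp=$(mktemp)   # lives in /tmp, not in the watched manifests directory
  awk -v r="$range" '
    { print }
    /--service-cluster-ip-range=/ {
      match($0, /^ */)   # RLENGTH = number of leading spaces
      print substr($0, 1, RLENGTH) "- --service-node-port-range=" r
    }' "$manifest" > "$tmp"
  cat "$tmp" > "$manifest" && rm -f "$tmp"   # overwrite in place, keep file mode
}

# Usage (as root, on the master): add_nodeport_range
```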

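5.3 Creating and using imagePullSecrets

The company's Docker registry (Harbor) is private, so images can only be pulled after authenticating. Create a docker-registry secret named registry-key, which Pods then reference under imagePullSecrets: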

#kubectl create secret docker-registry registry-key --namespace=default --docker-server=registry.lowaniot.com --docker-username=admin --docker-password=HarborLowan250 --docker-email=yanfei.yu@lowaniot.com
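Two optional follow-ups are sketched below, assuming the `registry-key` secret above exists. The first decodes the secret to check which registry and user it holds. The second attaches it to a namespace's `default` ServiceAccount, so that Pods created afterwards without their own `imagePullSecrets` entry also use it. The function names are hypothetical; the `kubectl` commands follow the Kubernetes documentation on pulling images from a private registry.

```shell
# Hypothetical helper: print the decoded .dockerconfigjson of registry-key.
show_registry_secret() {
  kubectl get secret registry-key -n "${1:-default}" \
    -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
}

# Hypothetical helper: make the namespace's default ServiceAccount use registry-key.
attach_to_default_sa() {
  kubectl patch serviceaccount default -n "${1:-default}" \
    -p '{"imagePullSecrets": [{"name": "registry-key"}]}'
}
```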

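# Install the Pod network: flannel (master node)

A Pod network add-on must be installed for the cluster to work; otherwise, Pods cannot communicate with each other.

Kubernetes supports several network solutions. This guide uses flannel for now; Canal will be discussed later.

Download kube-flannel.yml: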

#mkdir k8s

#cd k8s

#wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

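If the "Network": "10.244.0.0/16" value in the yml differs from the --pod-network-cidr passed to kubeadm init, change it to match. Otherwise, traffic between nodes (including traffic to Cluster IPs) may fail.

Since the --pod-network-cidr used above is already 10.244.0.0/16, the yaml file needs no changes here.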
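Rather than comparing by eye, the following sketch extracts the `Network` value from the flannel manifest and compares it with the CIDR given to `kubeadm init`. It assumes the `net-conf.json` section contains a line of the form `"Network": "10.244.0.0/16",` as in the upstream file. The helpers are hypothetical.

```shell
# Hypothetical helper: print the first "Network" value found in the manifest.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "${1:-kube-flannel.yml}" | head -n 1
}

# Hypothetical helper: compare it with the --pod-network-cidr value.
check_flannel_cidr() {
  local net
  net=$(flannel_network "$1")
  if [ "$net" = "$2" ]; then
    echo "match: $net"
  else
    echo "mismatch: flannel uses $net, kubeadm used $2"
    return 1
  fi
}

# Usage: check_flannel_cidr kube-flannel.yml 10.244.0.0/16
```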

Apply flannel:

#kubectl apply -f kube-flannel.yml

Check the Pod status

Wait a few minutes and make sure all Pods are Running:

# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-7ff77c879f-strdz 1/1 Running 1 17h 10.244.0.4 master <none> <none>

kube-system coredns-7ff77c879f-wwjcp 1/1 Running 1 17h 10.244.0.5 master <none> <none>

kube-system etcd-master 1/1 Running 1 17h 192.168.2.25 master <none> <none>

kube-system kube-apiserver-master 1/1 Running 1 17h 192.168.2.25 master <none> <none>

kube-system kube-controller-manager-master 1/1 Running 3 17h 192.168.2.25 master <none> <none>

kube-system kube-flannel-ds-5whtg 1/1 Running 1 22m 192.168.2.25 master <none> <none>

kube-system kube-proxy-7q8kk 1/1 Running 1 17h 192.168.2.25 master <none> <none>

kube-system kube-scheduler-master 1/1 Running 4 17h 192.168.2.25 master <none> <none>

Note: the coredns Pods get their IPs from the Pod network 10.244.0.0/16.
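Instead of re-running `kubectl get pod` by hand, you can use `kubectl wait` to block until the Pods report Ready. The following is a minimal sketch; the 300-second default timeout is an arbitrary choice, and the function name is hypothetical.

```shell
# Hypothetical helper: wait until every Pod in kube-system is Ready.
wait_system_pods() {
  kubectl wait --namespace kube-system --for=condition=Ready pod --all --timeout="${1:-300s}"
}

# Usage (on the master): wait_system_pods 600s
```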

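# Install the Dashboard (master node)

Download the Dashboard manifest:

#wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.1/aio/deploy/recommended.yaml

Edit recommended.yaml: in the kubernetes-dashboard Service, add type: NodePort and nodePort: 31443 so that the Dashboard can be reached from other machines.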

#vim recommended.yaml

    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      type: NodePort
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 31443
      selector:
        k8s-app: kubernetes-dashboard

# kubectl apply -f recommended.yaml

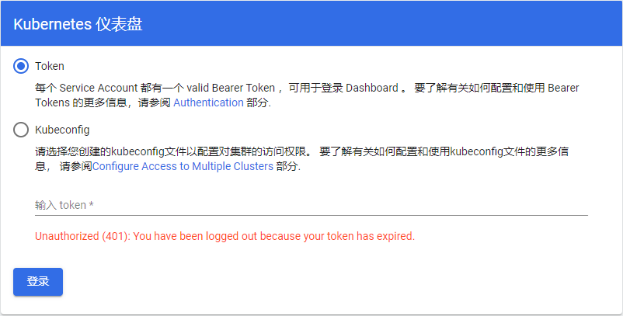

Create the login credentials (RBAC)

Create an admin-user ServiceAccount:

# vim dashboard-adminuser.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard

# kubectl apply -f dashboard-adminuser.yaml

Bind admin-user to the built-in cluster-admin ClusterRole with a ClusterRoleBinding:

# vim dashboard-ClusterRoleBinding.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: admin-user
        namespace: kubernetes-dashboard

# kubectl apply -f dashboard-ClusterRoleBinding.yaml

Get the token:

# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-pstl4

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: a43e6f40-8f9c-4946-b55e-758361f35a05

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IndNVVBnUVhWSTl1Q05HMkduR0d5MXVWVFZmamFXTUttdWxXTVRRWnJPTkUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXBzdGw0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhNDNlNmY0MC04ZjljLTQ5NDYtYjU1ZS03NTgzNjFmMzVhMDUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.uKKeSQ55ycg-ZLa06MgXI0h9H4oW7X0tDMr-iRFz8V4aQueWqAa-ib_Eddq8rDNni5WJvH0yWtbyDV6kmvxIqRMRLCCHCyenrjdXFxGs-9a_asStu8Kg7GYqD2a2CZBzrDk3lyVrETPvL0hnPeVtoJAmnfUbDgwk7pJCGueid1qoJ7wZnkicK3LFhlyc6qxX3ksI8dKz5fgg4TLEtt8uEj9bPEbRnJrMCTpu5vXvoGUquB7J-aps_7no2PiAj9fp0phqdqTMtnbETp39X4bkcKxXXgik84qGFpIcj4R2kj_c0gKKB9io5AJHLz6FR2cKRG3KTJZPY198E4rOfSaSKg
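The command above prints the whole Secret. To get only the token, here is a sketch based on the approach in the Dashboard project's sample-user guide. It relies on Kubernetes 1.18 automatically creating a token Secret for each ServiceAccount (referenced as `.secrets[0]`), which newer Kubernetes releases no longer do. The function name is hypothetical.

```shell
# Hypothetical helper: print the admin-user token only.
dashboard_token() {
  local ns=kubernetes-dashboard secret
  secret=$(kubectl -n "$ns" get sa admin-user -o jsonpath='{.secrets[0].name}')
  kubectl -n "$ns" get secret "$secret" -o go-template='{{.data.token | base64decode}}'
}
```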

Open the cluster's Dashboard UI and log in with the token obtained above:

https://192.168.2.25:31443/

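# Join the worker nodes to the cluster (worker nodes)

Log in to each worker node, make sure Docker, kubeadm, kubelet and kubectl are installed, and run the join command saved earlier: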

#kubeadm join 192.168.2.25:6443 --token axxu39.kmkycmnaf1kf1xs1 \

--discovery-token-ca-cert-hash sha256:18419b2326108aef1498a6e0959117975d8b59ebd7e3d409205257469fe9bbb9

Enable kubelet at boot:

#systemctl enable kubelet

To fix kubelet errors containing "failed to get cgroup", enable CPU and memory accounting in the kubelet drop-in:

#vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

CPUAccounting=true

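MemoryAccounting=true

Reload systemd and restart kubelet so that the change takes effect:

#systemctl daemon-reload

#systemctl restart kubelet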

Log in to the master and list the nodes:

# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready master 17h v1.18.1 192.168.2.25 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://19.3.9

node1 Ready <none> 5m2s v1.18.1 192.168.2.26 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://19.3.9

node2 Ready <none> 118s v1.18.1 192.168.2.27 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://19.3.9

Log in to a worker node and check its network interfaces:

# ifconfig

...

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.1.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::80e7:9eff:fe5d:3d94 prefixlen 64 scopeid 0x20<link>

ether 82:e7:9e:5d:3d:94 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

...

flannel creates the flannel.1 interface, the VXLAN device that carries Pod traffic between nodes.

# Test: deploy the P010 application from a yml file (master node)

Using P010 as an example, create flaskapp-deployment.yaml:

#cat flaskapp-deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: p010
    spec:
      selector:
        matchLabels:
          app: p010
          run: p010
      replicas: 1
      template:
        metadata:
          labels:
            app: p010
            run: p010
        spec:
          imagePullSecrets:
            - name: registry-key
          volumes:
            - name: timesync
              hostPath:
                path: /etc/localtime
            - name: p010readmeterfiles
              hostPath:
                path: /data/app/P010/readMeterFiles
            - name: p010log
              hostPath:
                path: /data/app/P010/log
          containers:
            - name: p010
              image: registry.lowaniot.com/p010/alpine_p010:4.1.2
              imagePullPolicy: IfNotPresent
              env:
                - name: InitData
                  value: 'false'
                - name: DataUser
                  value: 'root'
                - name: DataPwd
                  value: 'Lowan728%'
                - name: DataUrl
                  value: '172.16.10.8'
                - name: DataPort
                  value: '3306'
                - name: Hesdb
                  value: 'hes_dev'
                - name: loraserverprodinitialsize
                  value: '16'
                - name: loraserverprodminidle
                  value: '16'
                - name: loraserverprodmaxactive
                  value: '16'
                - name: App003db
                  value: 'app003_dev'
                - name: app003initialsize
                  value: '5'
                - name: app003minidle
                  value: '5'
                - name: app003maxactive
                  value: '20'
                - name: HesLogdb
                  value: 'hes_log_dev'
                - name: hesloginitialsize
                  value: '20'
                - name: heslogminidle
                  value: '20'
                - name: heslogmaxactive
                  value: '40'
                - name: RedisPort1
                  value: '26380'
                - name: RedisUrl1
                  value: '172.16.10.5'
                - name: RedisPort2
                  value: '26380'
                - name: RedisUrl2
                  value: '172.16.10.6'
                - name: RedisPort3
                  value: '26380'
                - name: RedisUrl3
                  value: '172.16.10.7'
                - name: RedisPwd
                  value: '123456'
                - name: redisdatabase
                  value: '2'
                - name: MqttUrl
                  value: '192.168.20.184'
                - name: MqttPwd
                  value: 'public'
                - name: MqttUser
                  value: 'admin'
                - name: ZkUrl
                  value: '192.168.20.35:2181'
                - name: HostIP
                  value: '172.16.10.7'
                - name: KafkaUrl
                  value: '172.16.10.7:9092,172.16.10.8:9092,172.16.10.9:9092'
                - name: LANG
                  value: 'POSIX.utf8'
                - name: CoreApi
                  value: '192.168.2.101:8080'
                - name: Coreapiopen
                  value: 'false'
                - name: ReadmeterTimeout
                  value: '30'
                - name: ReadmeterAddtionalMaxTimeout
                  value: '10'
                - name: Topic
                  value: 'task-dlms-0'
                - name: infologmax
                  value: '200GB'
              volumeMounts:
                - name: p010log
                  mountPath: /opt/P010/log
                - name: p010readmeterfiles
                  mountPath: /opt/P010/readMeterFiles
                - name: timesync
                  mountPath: /etc/localtime
              ports:
                - containerPort: 21010
                  hostPort: 21010
          nodeSelector:
            slave: "4"

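Apply the yml file. The nodeSelector (slave: "4") schedules the Pod only onto a node labeled slave=4; labels are added in the last section of this guide. If no node has that label, the Pod stays Pending.

# kubectl apply -f flaskapp-deployment.yaml

Check the Pod status. Wait a few minutes until it is Running: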

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

p010-767dcfff4-2s6nb 1/1 Running 0 4d1h 10.244.6.102 104 <none> <none>

Ping the Pod IP. If it replies, the flannel network is working.

# ping 10.244.6.102 -c 3

PING 10.244.6.102 (10.244.6.102) 56(84) bytes of data.

64 bytes from 10.244.6.102: icmp_seq=1 ttl=63 time=1.86 ms

64 bytes from 10.244.6.102: icmp_seq=2 ttl=63 time=0.729 ms

64 bytes from 10.244.6.102: icmp_seq=3 ttl=63 time=1.05 ms

--- 10.244.6.102 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2001ms

rtt min/avg/max/mdev = 0.729/1.215/1.862/0.477 ms

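Test the service

The container publishes port 21010 through hostPort (not a NodePort Service), so connect to port 21010 on the node where the Pod runs: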

# telnet 192.168.2.26 21010
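telnet is often not installed on a minimal CentOS 7 system. The following sketch looks up the node running the Pod (via the `app=p010` label from the Deployment) and tests the hostPort with bash's built-in `/dev/tcp` redirection. Both helpers are hypothetical, and `port_open` requires bash.

```shell
# Hypothetical helper: IP of the node hosting the first app=p010 Pod.
p010_node_ip() {
  kubectl get pods -l app=p010 -o jsonpath='{.items[0].status.hostIP}'
}

# Hypothetical helper: succeed if a TCP connection to $1:$2 opens within 3 s.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Usage: port_open "$(p010_node_ip)" 21010 && echo "port 21010 is reachable"
```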

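# Install metrics-server for resource metrics (master node)

Download the metrics-server manifest. The "latest" release may require a newer Kubernetes version than 1.18. Check the compatibility table in the metrics-server README and download a matching release if necessary.

# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Edit components.yaml and add a command section. The added flags make metrics-server skip verifying the kubelets' CA certificates (--kubelet-insecure-tls) and connect to the kubelets by InternalIP: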

#vim components.yaml

    ...
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
            command:
            - /metrics-server
            - --kubelet-preferred-address-types=InternalIP
            - --kubelet-insecure-tls
          nodeSelector:
    ...

# kubectl apply -f components.yaml

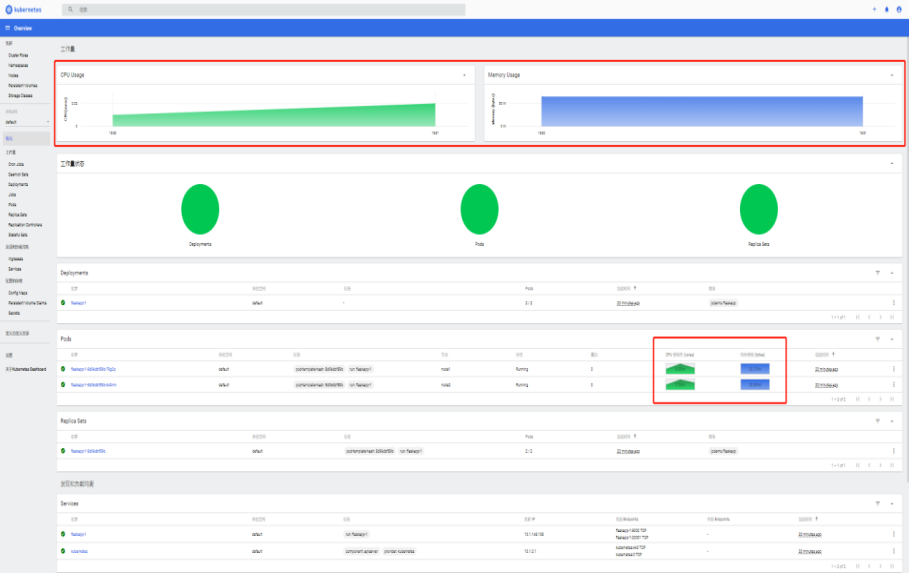

Open the Dashboard UI again and log in with the token obtained earlier:

https://192.168.2.25:31443/

After you log in, the Dashboard now shows CPU and memory usage charts.
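To check metrics-server from the command line, wait for its Deployment to roll out and then query node usage, as in the following sketch. `kubectl top` may still report that metrics are not available for a minute or so after the Pod starts. The function name is hypothetical.

```shell
# Hypothetical helper: wait for the metrics-server rollout, then show node usage.
check_metrics() {
  kubectl -n kube-system rollout status deployment/metrics-server --timeout=180s &&
    kubectl top nodes
}
```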

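# Add node labels for Pod placement (master node)

Deployments pick their node through nodeSelector (for example, slave: "4" in the P010 Deployment), so label the nodes as the business requires: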

kubectl get node --show-labels

kubectl label nodes node1 slave=1

kubectl label nodes node2 slave=2
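A few related label commands follow, along with a sketch of a hypothetical check. Before you apply a Deployment, the check confirms that some node carries the label its nodeSelector asks for (for example, `slave=4` in the P010 Deployment).

```shell
# Change an existing label value:  kubectl label nodes node1 slave=3 --overwrite
# Remove a label:                  kubectl label nodes node1 slave-
# List nodes with a given label:   kubectl get nodes -l slave=1

# Hypothetical helper: succeed only if at least one node matches the selector.
has_node_with_label() {
  [ -n "$(kubectl get nodes -l "$1" -o name)" ]
}

# Usage: has_node_with_label slave=4 || echo "no node labeled slave=4; the Pod would stay Pending"
```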
